Many machine learning packages require string features to be converted to numerical representations for models to work properly. Categorical string features can represent a wide range of data (e.g., gender, names, marital status) and are notoriously difficult to preprocess automatically. This post covers a Python-based framework designed to automate the preprocessing tasks required when dealing with such string data. The main points discussed in this article are listed below.

Table of Contents

- The Problem with Automated Preprocessing

- Challenges Addressed by the Framework

- The Methodology

- Evaluation Results

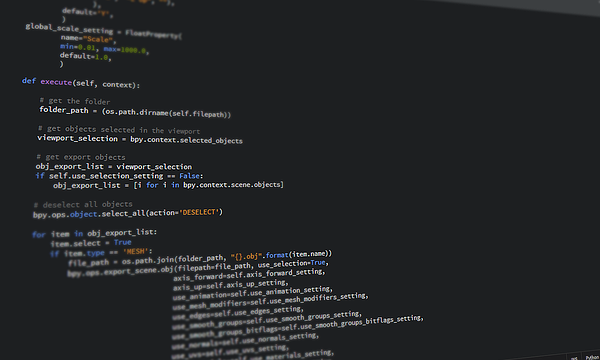

- Python Implementation

Let’s start with the general problems that arise when we try to automate this preprocessing.

The Problem with Automated Preprocessing

Real-world datasets frequently contain categorical string data, such as zip codes, names, or occupations. Many machine learning techniques require such string features to be converted to a numerical representation in order to function properly. The specific processing required depends on the type of data. For example, latitude and longitude may be the ideal way to represent geographical string data (e.g., addresses).

Data scientists often have to preprocess such raw data manually, which takes a large amount of time, up to 60% of their day. Automated data cleaning tools are available, but they frequently fail to cover the enormous variety of categorical string data.

John W. van Lith and Joaquin Vanschoren propose a framework, in “From Strings to Data Science”, for systematically identifying and encoding various types of categorical string features in tabular datasets. We will also examine an open-source Python implementation and test it on a dataset.

Challenges Addressed by the Framework

The framework addresses several challenges. The first is type identification, which seeks to detect predefined ‘types’ of string data (for example, dates) that require additional preprocessing. Probabilistic Finite-State Machines (PFSMs) are a viable approach here: they are based on regular expressions and can generate type probabilities. They can also detect missing or out-of-place entries, such as numeric values in a string column.
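To make the idea of type identification concrete, here is a minimal sketch that scores a column against a few regular expressions and flags entries that do not fit the winning type. It is a deliberate simplification and not a PFSM: it only counts full-pattern matches instead of assigning probabilities to state transitions. The pattern set, the missing-value tokens, and the function names are all assumptions made for illustration.

```python
import re

# Hypothetical type patterns (assumption, not taken from the framework).
# A real PFSM attaches probabilities to transitions; this sketch only
# counts how many entries fully match each pattern.
TYPE_PATTERNS = {
    "integer": re.compile(r"[+-]?\d+"),
    "float": re.compile(r"[+-]?\d*\.\d+"),
    "date": re.compile(r"\d{4}-\d{2}-\d{2}"),
}
# Hypothetical tokens treated as missing values.
MISSING_TOKENS = {"", "na", "n/a", "nan", "null", "none", "?"}


def is_missing(value):
    return value.strip().lower() in MISSING_TOKENS


def matches_known_type(value):
    return any(p.fullmatch(value.strip()) for p in TYPE_PATTERNS.values())


def score_column(values):
    """Return, per type, the fraction of non-missing entries that match it."""
    present = [v.strip() for v in values if not is_missing(v)]
    total = len(present)
    scores = {}
    for name, pattern in TYPE_PATTERNS.items():
        hits = sum(1 for v in present if pattern.fullmatch(v))
        scores[name] = hits / total if total else 0.0
    # Entries matched by no known pattern count as generic strings.
    unmatched = sum(1 for v in present if not matches_known_type(v))
    scores["string"] = unmatched / total if total else 0.0
    return scores


def infer_type(values):
    """Pick the highest-scoring type and list entries that do not fit it."""
    scores = score_column(values)
    best = max(scores, key=scores.get)
    if best == "string":
        # In a string column, numeric or date-like entries are out of place.
        outliers = [v for v in values if matches_known_type(v)]
    else:
        pattern = TYPE_PATTERNS[best]
        outliers = [
            v for v in values
            if not is_missing(v) and not pattern.fullmatch(v.strip())
        ]
    return best, outliers


if __name__ == "__main__":
    print(infer_type(["2021-01-05", "2021-02-11", "n/a", "hello", "2020-12-31"]))
    print(infer_type(["red", "blue", "42", "green"]))
```

The per-type fractions play the role of rough type scores, and the second return value shows how the same pass can surface out-of-place entries such as a number inside a string column.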

The framework also addresses statistical type inference, which uses the intrinsic data distribution to predict a feature’s statistical type (e.g., ordinal or nominal), and state-of-the-art (SOTA) encoding techniques, which convert categorical string data to numeric values. Encoding is difficult because entries may contain small errors (e.g., typos) or carry intrinsic meaning (e.g., a time or location).
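One way to see why typos complicate encoding, and how an encoder can tolerate them, is to compare strings by their shared character n-grams. The sketch below is an illustrative stand-in in the spirit of similarity-based encoders, not necessarily the encoder the framework uses. The function names, the choice of 3-grams, and the prototype list are assumptions.

```python
def char_ngrams(text, n=3):
    """Return the set of character n-grams of a lowercased, space-padded string."""
    padded = f" {text.lower()} "
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}


def ngram_similarity(a, b, n=3):
    """Jaccard similarity between the n-gram sets of two strings (0.0 to 1.0)."""
    grams_a, grams_b = char_ngrams(a, n), char_ngrams(b, n)
    if not grams_a and not grams_b:
        return 1.0  # two empty strings are treated as identical
    return len(grams_a & grams_b) / len(grams_a | grams_b)


def similarity_encode(values, prototypes, n=3):
    """Encode each value as its similarity to every prototype category."""
    return [[ngram_similarity(v, p, n) for p in prototypes] for v in values]


if __name__ == "__main__":
    # Hypothetical prototype categories and a column containing a typo.
    prototypes = ["London", "Paris"]
    for row in similarity_encode(["London", "Londno", "Paris"], prototypes):
        print(row)
```

With a plain one-hot encoding, a misspelled entry would become its own unrelated category. Here it keeps a non-zero similarity to the category it most resembles, so the typo degrades the representation gradually instead of breaking it.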

Now let’s look at the framework’s methodology in detail.

The Methodology

The system is designed to detect and appropriately encode various types of string data in tabular datasets, as shown in the figure. To begin, PFSMs are used to determine whether a column is numeric, contains a known type of string feature (such as a date), or contains any other kind of standard string data. Based on this first categorization, appropriate missing-value and outlier treatment methods are then applied to the complete dataset to correct inconsistencies.

Columns with recognized string types are then processed in an intermediate type-specific manner, while the other columns are categorized according to their statistical type (e.g., nominal or ordinal). Finally, the data is encoded using the encoding that best fits each feature.
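The final step, choosing an encoding according to the statistical type, can be sketched as a small dispatch function. This is only an illustration under simple assumptions: ordinal columns get integer ranks from a user-supplied order, nominal columns get one-hot vectors, and the function names and the two-way split are hypothetical, not the framework’s actual API.

```python
def ordinal_encode(values, order):
    """Map each category to its rank in the given low-to-high order."""
    rank = {category: index for index, category in enumerate(order)}
    return [rank[v] for v in values]


def one_hot_encode(values):
    """Return the sorted category list and one indicator vector per value."""
    categories = sorted(set(values))
    vectors = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, vectors


def encode_column(values, statistical_type, order=None):
    """Encode a column with the scheme that fits its statistical type."""
    if statistical_type == "ordinal":
        if order is None:
            raise ValueError("ordinal columns need an explicit category order")
        return ordinal_encode(values, order)
    if statistical_type == "nominal":
        return one_hot_encode(values)[1]
    raise ValueError(f"unknown statistical type: {statistical_type}")


if __name__ == "__main__":
    print(encode_column(["low", "high", "medium"], "ordinal",
                        order=["low", "medium", "high"]))
    print(encode_column(["red", "blue", "red"], "nominal"))
```

Integer ranks preserve the ordering that an ordinal feature carries, while one-hot vectors avoid implying an order among nominal categories that have none.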

String Feature Inference

The initial stage was to build on PFSMs and the probability …….

Source: https://analyticsindiamag.com/a-guide-to-automated-string-cleaning-and-encoding-in-python/